Introducing the Virtual Production R&D Discovery Pilot Winners

Meet our latest cohort of innovators: four creative businesses will spend three months developing new ideas in Virtual Production (VP) methods in collaboration with academic experts at Royal Holloway, University of London. VP and AI technologies are taking the sector by storm, revolutionising how content is made and demanding new ways of thinking about skills, collaboration, workflows and story building.

Creative businesses taking part in the programme will benefit from research insight, support from the StoryFutures team, input from our industry advisors Sol Rogers, Lisa Gray and Fiona Kilkelly, and £8,000 in R&D funding for each project. They will deliver early-stage prototypes in Autumn 2022, building towards further R&D, funding, and commercial opportunities.

You can find out more about each project below:

Unit 9: An Actor Virtually Prepares (Actor training for virtual production)

SME Lead: Peter Bathurst

Academic Partners: Dr Will Shüler and Professor Jen Parker-Starbuck (Drama)

What impact does working in the new spaces of LED volume and hybrid virtual production have on actors and their performances? Cinematographer Peter Bathurst and theatre academics Dr Will Shüler and Professor Jen Parker-Starbuck will investigate this in practice with actors by restaging narrative elements previously captured in ‘real world’ environments. This will help us understand the challenges inherent in these new forms, and will ultimately provide material for expandable workshops and short courses that introduce these skills to other performers. The project will culminate in a ‘toolkit’ that helps actors understand these spaces, navigate new and developing experiences, demystify the technology and jargon, and establish best practice.

Anna Valley: Virtual Production Workflows

SME Leads: Christina Nowak and Mark Meggy

Academic: Armando Garcia (Media Arts)

This R&D project will investigate, and begin to solve, some of the foundational problems in the creative practice of virtual production. The aim is to make the technology more accessible to newcomers while developing methodologies that resolve workflow compatibility issues between technologists, digital artists, lens-based technicians and artists, and performers.

The project will work primarily with finalists and recent graduates of Royal Holloway’s BA Games Art and Design and BA Film, Television and Digital Production courses to explore topics including colour management, asset output, camera and lens integration, render efficiency, and motion capture integration. Ultimately, we would like the project to serve as a springboard for standards, white papers and learning resources that help cultivate the young talent the real-time industry is so desperately short of.

LIVR: Arc Ai (AI-led storytelling)

SME Lead: Phil Matthews

Academic: Dr Li Zhang (Computer Science)

Arc Ai is a collaborative project exploring whether dynamic story plots can be generated using machine learning methods. The team aims to develop story arcs influenced by sample content, including text, images and video. We are aiming to create branching content that is immersive while maintaining a cohesive experience for the user. The result will be a system that takes users on unique, evolving experiences shaped by their actions, so that no two experiences are the same. We envisage the outcomes being especially beneficial to the EdTech, gaming and training sectors.

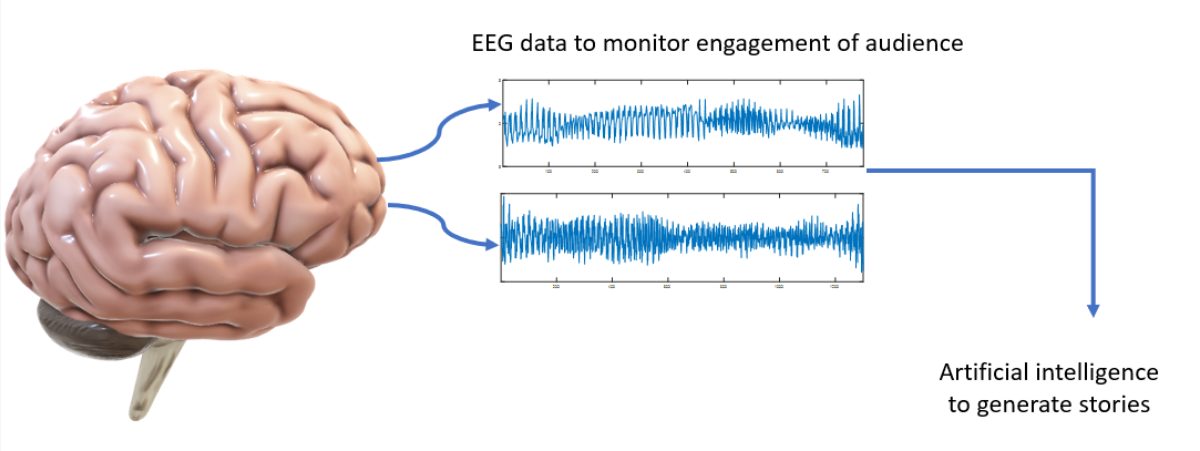

StoryFutures: Real-time Brain Computing for Storytelling

StoryFutures Lead: Johnny Johnson

Academic: Dr Clive Cheong Took (Electronic Engineering)

This R&D project will investigate how a person’s conscious and unconscious brainwaves could be used to shape how a narrative develops. The project will push the boundaries of interactive storytelling through biometric inputs, and will use the latest natural language processing AI to deliver a customised narrative to every individual participant. We will explore the complexities of neuroscience with the aim of establishing whether a person’s focused brain activity can control the development of an organic, interactive narrative.